PagedOut Issue 2 : What if - Infinite Malloc

This article was published in PagedOut Issue 2. This is the web version of the article.

What If - We tried to malloc infinitely?

Have you ever wondered what might happen if you tried to allocate infinite memory with malloc in C on Linux? Let’s find out.

DISCLAIMER: This experiment can be harmful to your system. Run it only in a virtual machine or on a computer dedicated to testing!

Proof of Concept

To investigate this idea, here is a simple while(1) infinite loop that allocates new memory on every iteration. We write to the first char of each newly allocated block to make sure that the allocation is kept as is and that the memory is really given to our program.

    #include <stdio.h>
    #include <stdlib.h>

    // gcc -Wall infmalloc.c -o infmalloc
    int main(void) {
        long long int k = 0;

        while (1) {
            // Allocates new memory
            char *mem = malloc(1000000);
            if (mem == NULL) {
                // malloc can fail, e.g. when a memory limit is set
                printf("\nmalloc failed after %lld allocations\n", k);
                return 1;
            }
            k += 1;

            // Use the allocated memory
            // to prevent optimization
            // of the page
            mem[0] = '\0';
            printf("\rAllocated %lld", k);
        }
        return 0;
    }

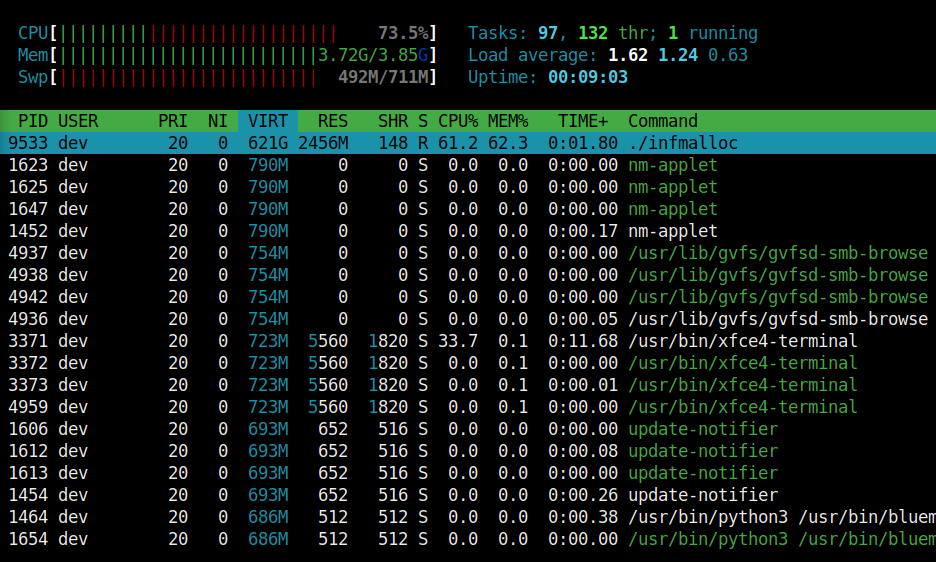

We can now compile it and run it. The first time I ran this, my computer crashed. I ran it a second time with htop running on the same machine, in order to track how much virtual memory we were able to allocate:

Wow, 621 GB of virtual memory! That is more than the sum of capacities of my RAM and my hard drive! So, what is going on here?

What is happening?

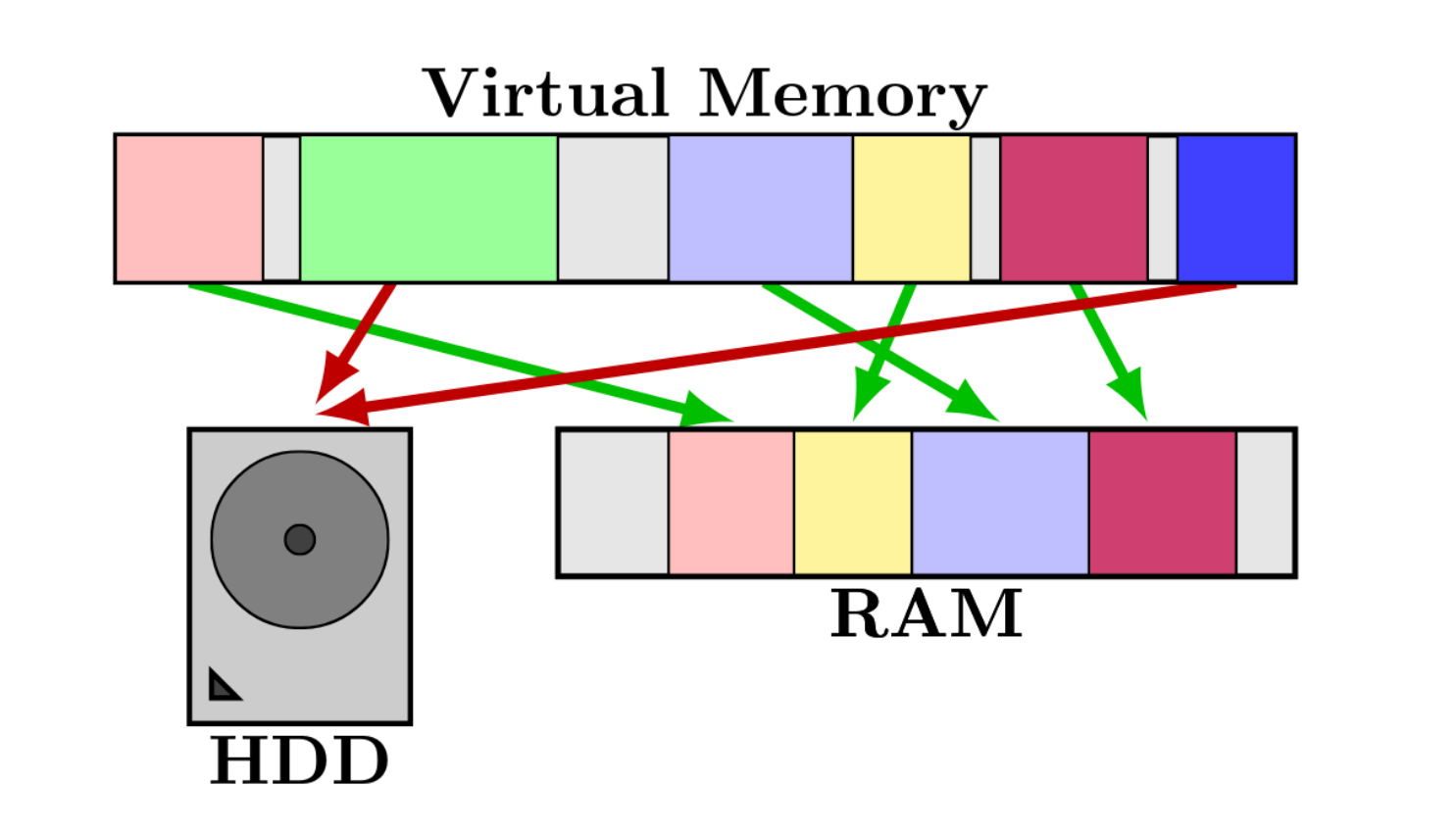

At first, our new allocated memory pages are created directly in RAM as long as there is enough space. At some point we will run out of space in RAM, so the last recently used pages (LRU Algorithm) will be moved to the swap, located onto hard disk in order to be able to write the new allocated pages to RAM. Our allocated virtual memory is now bigger than the RAM, this is called memory overcommit. It raises two problems:

-

Firstly, our program creates pages at extremely fast speed in the virtual memory address space.

-

Secondly, writing something to hard disk is extremely slow compared to writing to RAM. New pages to write to disk are pushed into an asynchronous queue waiting for the disk to write them.

Here is a scheme of the blocking configuration:

After a few seconds, there is so much pages to move to disk that the operating system will freeze waiting for the disk to write them. This creates a denial of service!

Protections

Fortunately, there are ways to prevent this kind of attack or bug. You can use ulimit or cgroups to set the maximum amount of virtual memory that a process can allocate.

You can view the currently set limit with ulimit -a (on most systems, it is unlimited by default).

You can set the maximum amount of virtual memory with ulimit -v. Note that ulimit -v takes a value in KiB, while malloc() takes a size in bytes. Be careful, though: if you run ulimit -v 1, a lot of things will break due to failed memory allocations (such as sudo, ulimit, …)!

Conclusions

We have seen that an infinite loop of malloc can create a denial of service by freezing the computer. In order to protect a system from such attacks or program bugs, one can set the maximum amount of virtual memory through ulimit -v VALUE or cgroups.

References

- PagedOut Issue 2 : https://pagedout.institute/download/PagedOut_002_beta2.pdf

- https://stackoverflow.com/questions/13921053/malloc-inside-an-infinte-loop

- https://www.win.tue.nl/~aeb/linux/lk/lk-9.html#ss9.6

- http://man7.org/linux/man-pages/man1/ulimit.1p.html

- http://man7.org/linux/man-pages/man7/cgroups.7.htm