Scraping search APIs - Depth first style

Introduction

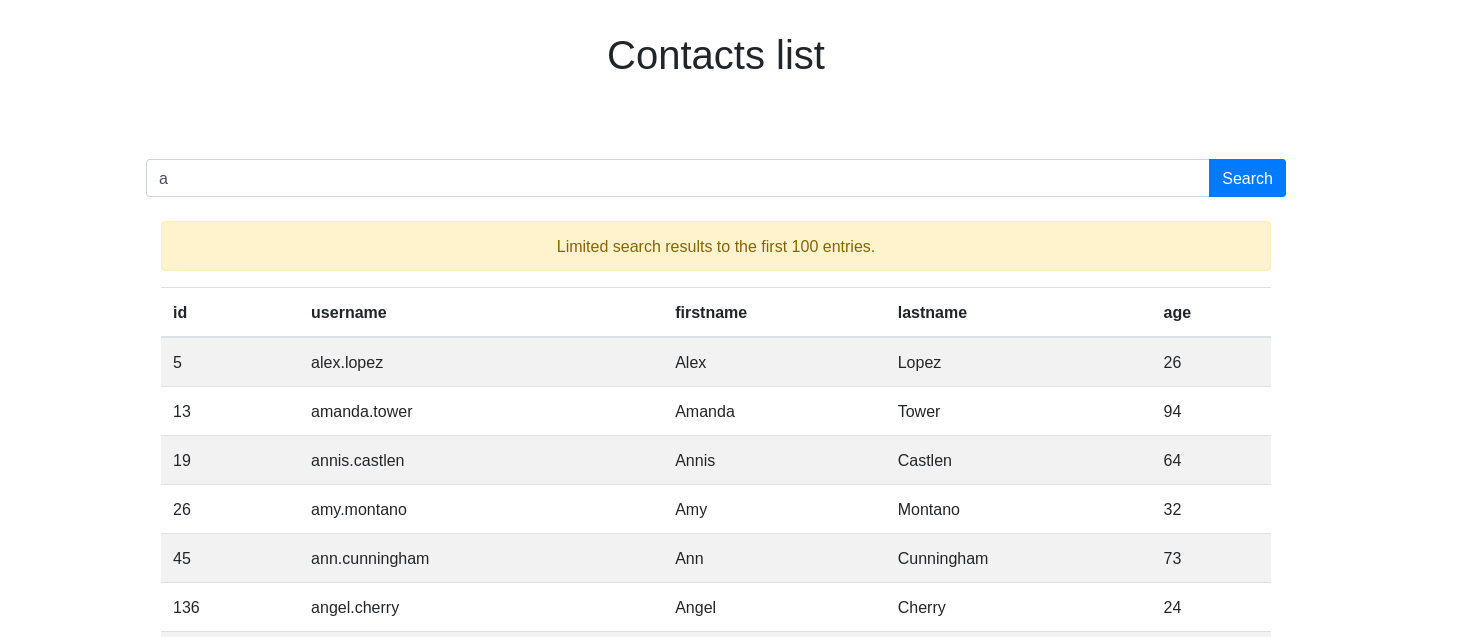

More and more web applications are divided into two parts. A frontend displaying the data to the user, and a backend in the form of an API sending this data. Today we’re going to take a look at these backend APIs and see how to extract database content optimally.

During a pentest or a redteam, it is very interesting to know the list of users of an application (to obtain access with weak passwords for example). This list can also be a subset or all of the company’s Windows domain accounts. During the test, you may have found an application with a functionality to search for a user in the directory.

This is a promising entry point for building a user list, but the API limits the response to the first 100 results. So how do you do it?

Test application

I have prepared a test web application for you to use with this article (download the source).

Launch of the application

To start the application, you have to install the necessary python packages with pip:

python3 -m pip install -r requirements.txt

Once the packages are installed, you can launch the application with the following command:

python3 app.py

The web application is then accessible at http://127.0.0.1:5000/.

This application allows you to search for a user in the company directory. It will only returns the first 100 results.

Generating a random dataset

If you want, you can use the create_db.py script to generate a random dataset (last name, first name, username, age) to populate the API database.

$ ./create_db.py -h

usage: create_db.py [-h] [-f FILE] [-n NUMBER] [-v]

Description message

optional arguments:

-h, --help show this help message and exit

-f FILE, --file FILE arg1 help message

-n NUMBER, --number NUMBER

Number of entries to generate

-v, --verbose Verbose mode

Quickly extract data from the API

In the test application, search requests are sent to the url /api/search/<query>. This URL returns the result of the query as a JSON containing message field and a results list containing the search results like this:

{

"message": "2 results.",

"results": [

{

"id": 1616,

"age": 23,

"firstname": "Abigail",

"lastname": "Sanchez",

"username": "abigail.sanchez"

},

{

"id": 1990,

"age": 45,

"firstname": "Abdul",

"lastname": "Hays",

"username": "abdul.hays"

}

]

}

The fastest way to extract all the data from the API is to perform a depth first search.

Depth first search

We will iterate through the API using a depth first search to extract the entire database. When we send a request to the API we can have two types of responses:

- More than 100 results: The answer is truncated to the first 100 results.

- Less than 100 results: We have all the data of the response to the query.

In the second case, we are certain to have all the response data for the request if the number of results is less than the limit set by the API (in the example application this limit is 100 results).

From these observations, we can create a simple decision tree:

- Send a request to the API:

- If Number of results >= 100: Refine the query.

- Otherwise Number of results <100: Store the results and go to the next query.

Here is an animation showing the path of the API graph and the number of request results:

We now have to write a script allowing to perform this depth first search on the API and store the results.

Final result

Once the script is finished, we can extract the 20,000 users in 4min 45seconds without prior knowledge on usernames.